Gemini 2.0 is the next generation of Google’s Gemini AI model, and it arrives with a set of new features. In this article, we review what is new in Gemini 2.0.

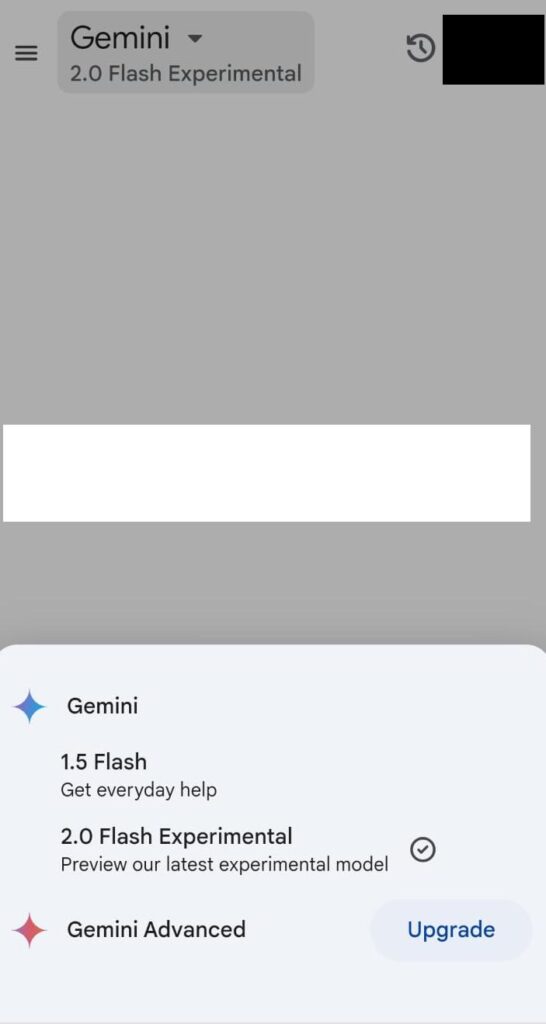

Google announced Gemini 2.0, which improves existing features and adds new ones: image generation, audio output, and the use of Google Search and Google Maps as tools. The first release is an experimental model, Gemini 2.0 Flash, with higher performance and lower latency. Developers can build with it through the Gemini API in Google AI Studio and Vertex AI. Gemini and Gemini Advanced users can select the new model from the model drop-down menu on desktop.
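To make the Gemini API mention above more concrete, here is a minimal sketch of how a text request could be assembled in Python using only the standard library. The endpoint path, the model name `gemini-2.0-flash-exp`, and the `x-goog-api-key` header are assumptions based on the public REST interface at the time of writing and may have changed, so check the current API reference before relying on them. The helper names `build_request` and `extract_text` are hypothetical.

```python
import json
import urllib.request

# Assumptions: REST base URL and experimental model name; verify against current docs.
API_BASE = "https://generativelanguage.googleapis.com/v1beta"
MODEL = "gemini-2.0-flash-exp"


def build_request(prompt: str, api_key: str) -> urllib.request.Request:
    """Build (but do not send) a generateContent request for a text prompt."""
    url = f"{API_BASE}/models/{MODEL}:generateContent"
    body = {"contents": [{"parts": [{"text": prompt}]}]}
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json", "x-goog-api-key": api_key},
        method="POST",
    )


def extract_text(response: dict) -> str:
    """Join the text parts of the first candidate in a parsed JSON response."""
    parts = response["candidates"][0]["content"]["parts"]
    return "".join(part.get("text", "") for part in parts)
```

To actually send the request, pass the result of `build_request(...)` to `urllib.request.urlopen`, parse the reply with `json.load`, and hand the resulting dictionary to `extract_text`. This requires a valid API key from Google AI Studio.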

Gemini 2.0 Flash builds on the improvements of 1.5 Flash, with better performance and faster response times. According to Google, 2.0 Flash outperforms Gemini 1.5 Pro on key benchmarks at twice the speed. In addition to multimodal inputs such as images, video, and audio, it supports multimodal output: natively generated images mixed with text, and steerable multilingual text-to-speech (TTS) audio. It can also call tools natively, including Google Search and code execution, as well as third-party functions defined by the user.
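The idea of user-defined functions can be illustrated with a small local sketch: the model replies with the name of a function and its arguments, and the application runs the matching function and sends the result back. The code below shows only the application side of that loop and makes no network calls. The `{"name": ..., "args": ...}` shape is an assumption modeled loosely on how function calls are commonly represented, and `TOOLS`, `tool`, `get_weather`, and `dispatch` are hypothetical names used for illustration.

```python
from typing import Any, Callable

# Hypothetical registry of functions the application exposes to the model.
TOOLS: dict[str, Callable[..., Any]] = {}


def tool(fn: Callable[..., Any]) -> Callable[..., Any]:
    """Register a function under its own name so it can be called by name."""
    TOOLS[fn.__name__] = fn
    return fn


@tool
def get_weather(city: str) -> dict:
    # Stub implementation; a real application would query a weather service.
    return {"city": city, "forecast": "sunny"}


def dispatch(function_call: dict) -> dict:
    """Run the function requested by the model and wrap its result."""
    name = function_call["name"]
    args = function_call.get("args", {})
    if name not in TOOLS:
        return {"name": name, "response": {"error": f"unknown function: {name}"}}
    return {"name": name, "response": TOOLS[name](**args)}
```

For example, `dispatch({"name": "get_weather", "args": {"city": "Paris"}})` runs the stub and returns its result wrapped with the function name, ready to be passed back to the model in the next turn.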

A key improvement in Gemini 2.0 Flash is its multimodal reasoning and long-context understanding. The model can follow complex instructions and plan ahead, call functions, and use tools natively, all with lower latency. These capabilities work together to support more agentic experiences.

Gemini 2.0 also improves Project Astra. It can now hold a conversation in multiple languages and in mixed languages, and it understands the dialogue better. Project Astra can use Google Search, Lens, and Maps. Its memory is also better: it keeps up to 10 minutes of in-session memory and can recall more past conversations, which improves the interaction between the user and Gemini. Latency has dropped, so the conversation feels more continuous and natural.

Google is working to bring Project Astra to the Gemini app and to smart glasses.

Gemini 2.0 can also power agents that act inside video games. Such an agent reasons about the game based on what is happening on the screen and suggests to the player, in real time, what to do next.

Google is also working to bring Gemini 2.0 into the physical world by applying its spatial reasoning capabilities to robotics.

Project Mariner: Project Mariner is a research prototype built with Gemini 2.0 that explores the future of human-AI interaction, starting with the browser. It can understand and reason across the information on your browser screen, including pixels and web elements such as text, code, images, and forms. It then uses that information, through an experimental Chrome extension, to complete tasks for you.

Jules: Jules is an experimental AI-powered code agent that integrates directly into a GitHub workflow. The agent can take on an issue, develop a plan, and execute it under the developer’s supervision.

Gemini users worldwide can access the chat-optimized version of experimental 2.0 Flash on desktop and mobile web, and it is coming soon to the Gemini mobile app.

Conclusion: Gemini 2.0 is a significant improvement over the previous version of Gemini and raises the bar for competing AI models. Image generation and audio output are only a small part of what the new version offers, and it seems that Gemini will soon be part of every area of our lives.